Can You Do Both: Fast Scans and Fast Writes in a Single System?

Have you ever wondered why most existing database systems focus solely on either analytical or transactional performance? The data orientation within the file format and the internals of the storage engine are key reasons for this specialization. Current database systems are unable to balance transactional and analytical processing, and therefore, are forced to optimize for just one of both workload types. Transaction-focused OLTP systems use row-based storage formats for quick updates and lookups, but these formats are inefficient for analytics-focused OLAP tasks. OLAP workloads require scanning many rows while typically accessing only a few columns, and row-based formats are simply not designed to handle this. OLAP systems use compressed columnar formats for fast scans, which make updates complex and slow, often lacking efficient point lookup capabilities. For instance, a simple table scan using a column store can be more than 5x faster than storing data in a row-based format due to the data movement characteristics of the format.

Why Current Systems Focus on One Workload

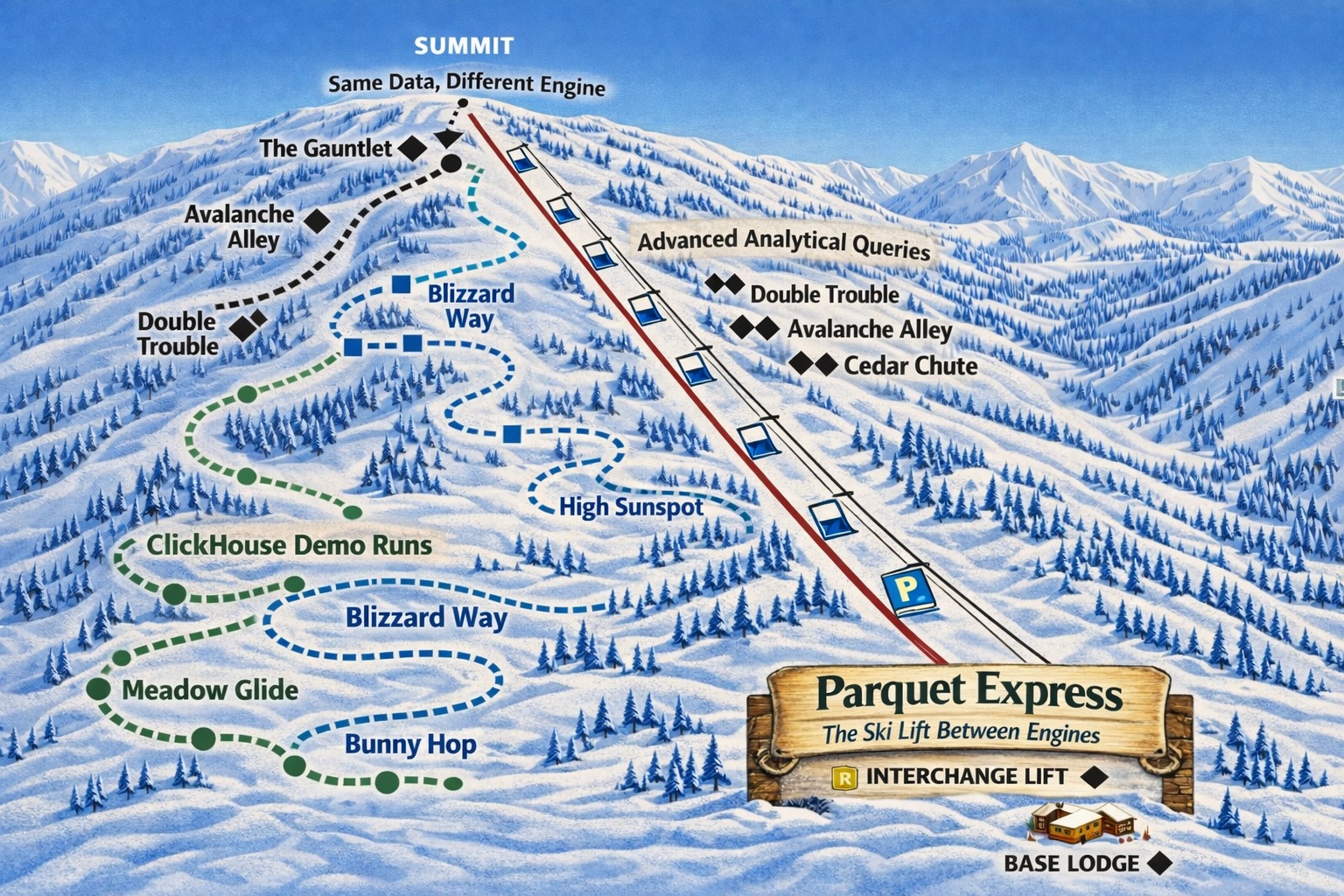

As shown in our schematic diagram below, OLAP and OLTP systems rely on different and often conflicting technologies. For example, index lookups in OLTP engines are often implemented as scans in OLAP engines, which significantly changes performance characteristics. Compression of data elements helps with sequential scans, but hinders in-place updates of records. As a result, today’s systems focus on one type of workload. To handle both workloads, current data stacks rely on multiple systems, but this often results in complex and brittle data pipelines, discussed in our first blog post.

Additionally, the characteristics of storage devices have a huge impact on the design and performance of systems. After all, most data sets do not fit into the main memory of the machines they run on. Therefore, it is crucial to understand the data-loading characteristics of two important storage trends: the rise of Solid-State Drives (SSDs) and the shift to cloud-based data processing. SSDs provide higher bandwidth and lower latency compared to Hard Disk Drives (HDDs), offering a cost-effective alternative for storage-heavy workloads. For more information on the fascinating topic of SSDs, you can read our previous blog post on SSD latencies.

When we look at cloud deployments, AWS offers multiple storage options, such as instance-local SSDs, replicated storage on instances in local VPCs (virtual private clouds), and object stores. These cloud technologies share similar characteristics as modern SSD RAIDs like high bandwidth and better latency than HDDs.

In this blog post, we will showcase how CedarDB is able to achieve outstanding performance on both workloads (“Hybrid Transaction and Analytical Processing”, HTAP) using a single unified storage system, called Colibri, that works for both SSD RAIDs and cloud environments. To bridge the requirements and properties of OLAP and OLTP, CedarDB relies on a hybrid storage engine, combining compressed columnar data for large scale analytics and row-based tuples for fast transactional performance on hot data. For more background on CedarDB’s novel hybrid storage engine, which we developed together with TUM, see the research paper that Tobias will present at VLDB'24 in late August.

To keep things focused, we will save the details of our cloud integration for a future post. However, if you’re eager for more content and technical details, feel free to dive into our paper, fittingly titled “Two Birds With One Stone: Designing a Hybrid Cloud Storage Engine for HTAP”.

Colibri: A Hybrid Storage Engine

The central component for handling HTAP workloads is our hybrid column-row storage engine that is able to manage hot and cold data in two different storage formats. In OLTP workloads, data access is typically focused on a small hot subset of the data. To efficiently support OLTP transactions, we store this hot data in an uncompressed, row-based format1. Cold data, which OLTP queries do not access frequently, is stored as large and encoded (i.e., compressed to allow processing without decompression) column chunks, an adaptation from data blocks. From this brief overview, you may be asking:

- How do we actually know what data is hot and what is cold?

- When do we move data from hot to cold (and vice versa)?

In the rest of this post, we’ll answer these two questions.

The Row Id B⁺-Tree

Our hybrid column-row store uses a B⁺-tree at its core, which is a widely used data structure in database systems to store values in a sorted order. We use the tree to organize all records based on their row id, which is an integer that is incremented for each new record. This gives us an implicit ordering by insertion time, which we can eventually use to detect hot records. Since traversing a B⁺-tree for every tuple can be expensive, we do not store one entry in the tree per tuple, but instead store blocks of records.

Given that hot tuples are usually newly inserted tuples (or updated cold tuples, which we will describe later), the hottest tuples are always associated with the highest row ids. Recent block additions to the tree are therefore stored uncompressed in a row-based format. Older entries that are no longer hot are periodically moved to a compressed columnar layout. Because these columns must be large enough to fully utilize the bandwidth of the storage devices, a compressed columnar block contains many more tuples than an uncompressed row-based block.

The figure above shows the different parts of our B⁺-tree. Inner nodes ① store pointers to leaf nodes and are ordered by row ids as keys ③. Leaf nodes ② store references to blocks ④/⑥, that can either be high cardinality columnar data blocks ⑤ or small row-based blocks ⑦.

To meet the performance objectives of CedarDB, it is essential to optimize this central data structure for concurrent access. We use an unconventional technique called optimistic lock coupling for our B⁺-tree, which offers high parallel performance and provides good code maintainability2.

With this B⁺-tree, sorted by row id, we can distinguish hot data from cold data. The second question was how to detect when hot data later becomes cold data or vice versa. To answer this, we first need to briefly discuss the famous five-minute rule. This rule of thumb provides guidance on the optimal time to swap out data items onto disks. In simple terms, the rule suggests that pages should be cached if they are used at least once every five minutes. While this concept remains relevant, we have observed several adaptations for newer hardware, effectively reducing the duration until items should be swapt. Our experiments (presented in the paper) indicate that servers with modern PCIe SSDs may benefit from a swapping duration between 10 and 40 seconds.

Making Data Storage Safe and Durable

A sane database system (not all do, unfortunately) must write logs to persistent storage before committing a transaction, known as write-ahead logging. This keeps your data safe and consistent by allowing the database system to always revert to the most recent committed state, even in the event of a catastrophic crash.

CedarDB’s hybrid storage solution employs two distinct logging paths, as our columnar format is immutable and aligns well with object storage requirements. All tuples within row-based blocks are subject to full logging at the record level. For the immutable data blocks, we only log the B⁺-tree operations, such as the creation of the block (the block file also needs to be persisted before committing the transaction) and marking tuples as deleted. Updates to these columnar data blocks are implemented as deletions and insertions, which also enables us to track hot tuples (a cold tuple is moved to the hot region again). Our paper presents several optimizations that are beyond the scope of this blog post.

CedarDB uses a timestamp-based multi-version concurrency control mechanism to guarantee transaction isolation. For mutable blocks, we maintain a version chain for each updated tuple. Further details and practical tips on our concurrency algorithm can be found in our docs.

Results

We designed our storage engine so that it performs well for transactional and for analytical workloads. In fact, our benchmarks show that it easily outperforms other popular database systems. We used the widely known benchmarks TPC-C (pure transactional workload) and the Ch-benCHmark, which is a combined transactional and analytical benchmark (HTAP) and builds on the idea of unifying TPC-C and TPC-H. All experiments were measured on an AMD Epyc 7713 server with 1 TiB of main memory and eight Samsung PM9A3 SSDs connected by RAID 0.

CedarDB’s hybrid storage in combination with multi-versioning provides the highest transactional throughput. DBMS Y, which only uses a pure column store, performs poorly for transactions, as expected. Here, PostgreSQL is already the best system we compare against, but CedarDB can still handle three times more transactions.

By the way, did you ever wonder why database papers only refer to DBMS X and Y and do not mention the actual name of the system? Unfortunately, many large traditional database vendors have a policy against naming in benchmarks, and as a small start-up, we can’t afford to be sued by multi-billion-dollar companies. This infamous policy is known as the DeWitt clause, which prevents fair competition and open research, and it’s long overdue that we get rid of such clauses.

Anyway, we will now examine more demanding hybrid workloads, having highlighted our pure transactional performance. We vary the number of analytical and transactional clients, and measure the skyline of how many queries were executed (y-axis) and how many transactions were committed (x-axis). Due to the vastly different performance numbers on combined workloads, we need a zoomed-in view to understand the competitors’ performance, clustered in the lower left corner. Both OLTP-focused systems (Postgres and DBMS X) report the same number of transactional queries as in the TPC-C experiment with 64 transnational clients but fail to process analytical queries. DBMS Y offers better analytical performance due to its column store, but is unable to process transactions efficiently. Finally, not only does CedarDB outperform transactional systems on transactional workloads and analytical systems on analytical workloads (by more than 3x in both categories), but it does so simultaneously!

Conclusion

So, can you do both in a single system? Turns out, if you carefully design your storage format and engine to deal with concurrent small writes and large scans, you can. You don’t even have to compromise on performance! Our column-row store effectively bridges the gap between these architectures by using optimized storage formats for separated hot and cold data. Join the waitlist now to be among the first to experience complex data analytics on up-to-date data with CedarDB.

Actually, we use small buffer pages that rely on a partition attributes across (PAX) format, which has row-store characteristics when loading data from external storage devices but stores data as little column chunks. So yep, we actually use a hybrid format inside our hybrid storage engine. ↩︎

Optimistic lock coupling was thoroughly tested and evaluated on adaptive radix trees by Leis et. al. This topic is interesting for many applications and data structures, so follow us on X or LinkedIn to get notified for future blog posts! ↩︎