The History of the Decline and Fall of In-Memory Database Systems

In the early 2010s, the drop in memory prices combined with an overall increase in the reliability of computer hardware fueled a minor revolution in the world of database systems. Traditionally, slow but durable magnetic disk storage was the source of truth for a database system. Only when data needed to be analyzed or updated would it be briefly cached in memory by the buffer manager. And as memory got bigger and faster, the access latency of magnetic disks quickly became a bottleneck for many systems.

Price development of main memory up until 2010. 1

A handful of companies and research groups seized the opportunity of cheap and reliable memory to get around this bottleneck. They broke with the 30-year tradition of buffer management and kept all data in memory instead. This allowed them to offer blazingly fast data processing in relational database systems such as Microsoft Hekaton, SAP HANA and HyPer, or key-value stores such as Redis. To maintain availability and durability despite the lack of durable data storage, they relied on a mix of failover to replicas and sequential writing of logs to disk.

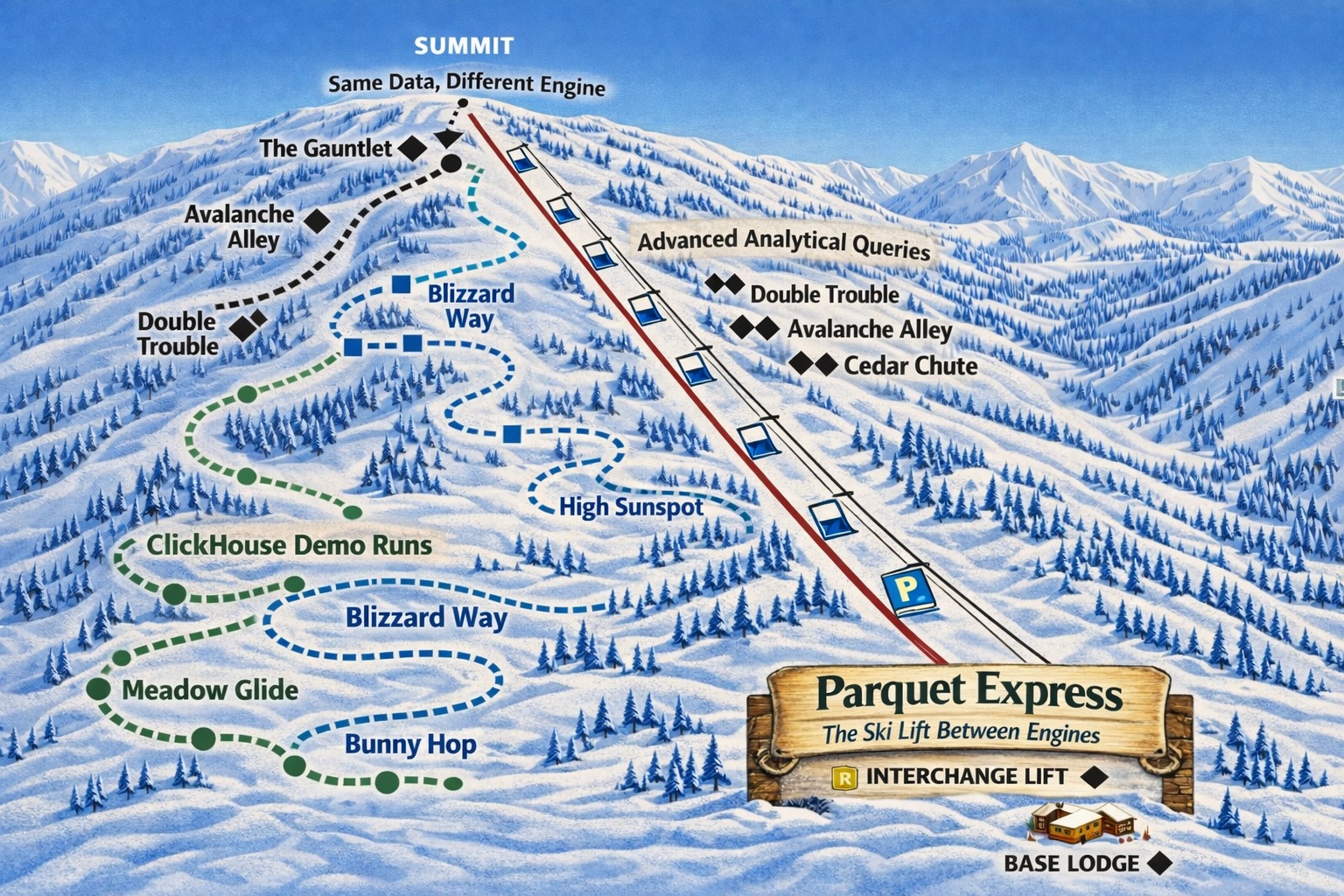

While in-memory systems have seen an almost meteoric rise, dominating all benchmarks, data analytics is now back on durable storage. All the major players, from analytical data warehouses like Snowflake, BigQuery and Redshift to HTAP systems like TiDB and SingleStore, no longer rely on in-memory alone. Even systems designed to be small in footprint and embedded in other processes, like SQLite and DuckDB, write their own on-disk format.

So what happened? Like its namesake, this article will examine the factors that led to the decline of in-memory systems and their failure to achieve widespread adoption, whether it was a mistake to build them in the first place, and what we can learn from their history.

Why They Failed to Catch On

The potential advantages of in-memory systems are clear: they are simpler to build, easier to run, and blazingly fast. Even with all the learning from HyPer, we have only been able to outperform it meaningfully through better query optimization, not through different execution strategies. At least not when the data fully fits in memory, which is precisely the crux of the matter. Because if it doesn’t, in-memory systems will not work at all. All these in-memory systems were built under the assumption that the growth of reasonably priced memory capacity would continue to be exponential. The reality, however, looked different.

Price development of main memory continued. 1

Almost simultaneously with the release of in-memory database systems, the fall in RAM prices per gigabyte stagnated. At the same time, data growth has not slowed. Despite what the headline of Jordan Tigani’s infamous Big Data is Dead post might suggest, data continues to grow. Jordan, of course, does not dispute this and instead focuses on the growth of relevant or hot data in most organizations. But even cold data has to be stored somewhere. And if your only option for that is main memory, it is going to get expensive. Even if all your data is “only” 2 TB.

Of course, there are still people out there with more than 2 TB of critical data. Enough of them, in fact, that AWS and SAP launched gigantic HANA-specific AWS machines with up to 32 TB of main memory for a whopping $400/h. In comparison, you can get a machine with 30 TB of storage and a comfortable 200 GB of main memory for less than $5/h. But for me, this price difference is not the main reason why main-memory database systems failed to capture a larger chunk of the database landscape.

Slow hard disks with even slower random access and expensive but fast RAM are no longer the only two options. In fact, the 30 TB of storage for the machine above is SSD storage. While even top-of-the-line HDDs today can barely achieve 550 MB/s sequential read throughput, modern SSDs can reach 8 GB/s. Don’t even get me started on their vastly superior random access performance. SSDs were just starting to enter the end-user segment in 2010 when in-memory systems were conceived. They are now a commodity. And in contrast to main memory, prices per GB for SSDs kept dropping.
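To put those throughput figures in perspective, here is a back-of-the-envelope sketch of how long one full sequential scan of 30 TB would take at each rate. It assumes a single device sustaining its peak sequential throughput and decimal units, which is a simplification: a real machine would spread that capacity over several drives.

```python
TB = 10**12  # decimal terabyte, in bytes
GB = 10**9
MB = 10**6

def full_scan_seconds(data_bytes: int, throughput_bytes_per_s: int) -> float:
    """Time to read data_bytes once at a constant sequential throughput."""
    return data_bytes / throughput_bytes_per_s

# Throughput figures taken from the text; everything else is an assumption.
hdd_seconds = full_scan_seconds(30 * TB, 550 * MB)
ssd_seconds = full_scan_seconds(30 * TB, 8 * GB)

print(f"HDD: {hdd_seconds / 3600:.1f} h")   # HDD: 15.2 h
print(f"SSD: {ssd_seconds / 60:.1f} min")   # SSD: 62.5 min
```

Under these assumptions, a scan that takes most of a working day on an HDD finishes in about an hour on an SSD, and that is before random access enters the picture.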

Price development of SSDs and main memory compared. 1

By eliminating the buffer manager altogether, in-memory systems deprived themselves of the ability to adapt to the benefits of this new class of storage. This is not to say that SSDs don’t have their own set of problems, or that buffer managers weren’t due for an overhaul. But even for the new reality of cloud storage, blob object storage like S3, buffer managers remain a vital component of a tiered storage hierarchy. Did all the effort that went into building in-memory database systems go to waste?

Were They a Mistake?

Those who are familiar with Betteridge’s Law of Headlines have probably already guessed my opinion on this question. For the rest of you, I don’t think that building in-memory systems was a waste of time and resources just because the assumptions and hopes held at the time of their inception didn’t come true. Just as the Roman Empire left traces all around modern-day Europe and the Mediterranean, and apparently the minds of men everywhere, in-memory systems still have a significant impact on data processing today.

The very reason why we cannot beat in-memory systems like HyPer in performance alone is that we rely heavily on the algorithms they devised. Their biggest gift is the idea that memory is no longer a second-class citizen in the form of a small and short-lived cache, but an ample resource to keep both database and query state. Many of the optimizations we use in CedarDB are unthinkable without ample memory. Being able to keep at least all query state in memory allows our join and aggregation algorithms to no longer be optimized for page-granular sequential access, but instead can rely on fast random access. While there are advantages for many algorithms, I want to focus on one of our workhorses, hash joins.

The beauty of hash tables is that they turn O(n * m) block-nested loop joins or O(n * log(n)) sort-merge joins into an O(n) operation, which requires a single hash table lookup for each tuple on the probe side. Of course, these properties are also true in a disk-based system, but there are reasons why PostgreSQL prefers the former two join algorithms in many cases. Hash joins rely heavily on random access, which is prohibitively expensive on traditional disks.

So even though hash joins are always better from a theoretical point of view, in practice O(n) means that the runtime is bounded by C + f * n, where C and f are constant factors. For a hash join, f includes the much higher random access cost, while for a sort-merge join it includes only the cheaper sequential access cost. This difference can even mask the logarithmic component of the sort-merge join, making it effectively cheaper for disk-based workloads. However, once the data fits in memory, the difference between a sequential and a random access is minuscule enough to drop out of the equation, and the algorithmically better hash joins will be the superior choice.
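The argument above can be made concrete with a toy cost model. The following is only a sketch: the function names are hypothetical, and the per-tuple access costs are illustrative assumptions rather than measurements. It models the hash join as C + f * n with a random-access f, and the sort-merge join as C + f * n * log2(n) with a sequential-access f.

```python
import math

def hash_join_cost(n: int, per_tuple: float, setup: float = 0.0) -> float:
    """Linear model C + f * n: one random hash table access per tuple."""
    return setup + per_tuple * n

def sort_merge_join_cost(n: int, per_tuple: float, setup: float = 0.0) -> float:
    """Model C + f * n * log2(n): sorting dominates, access is sequential."""
    return setup + per_tuple * n * math.log2(n)

# Assumed per-tuple access costs in microseconds (illustrative only).
DISK = {"random": 10_000.0, "sequential": 10.0}
MEMORY = {"random": 0.1, "sequential": 0.05}

def cheaper_join(n: int, medium: dict) -> str:
    """Return which join the toy model predicts to be cheaper."""
    hj = hash_join_cost(n, medium["random"])
    smj = sort_merge_join_cost(n, medium["sequential"])
    return "hash join" if hj < smj else "sort-merge join"

print(cheaper_join(1_000_000, DISK))    # sort-merge join
print(cheaper_join(1_000_000, MEMORY))  # hash join
```

With a 1000x gap between random and sequential access, the factor f swamps the log2(n) term of roughly 20, so the sort-merge join wins. Once the gap shrinks to 2x, the logarithmic term decides and the hash join comes out ahead.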

Also, just because in-memory systems did not achieve greater market dominance does not mean that they are completely irrelevant today. Many of the original in-memory systems are still around. SAP HANA powers countless ERP solutions, Redis continues to be one of the most popular key-value stores, Hekaton is available as an optimization path in Azure SQL, and HyPer has its place at Salesforce. So while it was certainly not a mistake to build them, they just did not turn out to be the all-encompassing solution their creators had hoped for.

What to Learn from Them

Of course, just because building in-memory systems was no mistake does not mean that none were made when building them. Much as the Brits studied the Roman Empire, rather unsuccessfully, to avoid repeating its mistakes, we want to learn from the mistakes, and also the successes, of in-memory database systems.

At least for me, the biggest mistake of in-memory database systems was to assume that they had found the one and only solution for data storage. Even in the 7 years we have been working on CedarDB, storage trends have changed drastically. While CedarDB initially targeted only SSDs, non-volatile memory became a thing only to vanish entirely within just a few years. And that’s not even the most significant change. The storage reality shifted from locally attached disks to cloud-based blob object storage. But because a buffer manager provides the necessary abstraction to the storage layer, we have been able to easily adapt. So even if the current storage reality is S3 and the like, the important lesson is to be prepared for that to change again.
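To illustrate why such an abstraction makes the storage layer swappable, here is a minimal sketch of a buffer manager that only talks to a small backend interface. This is not CedarDB's implementation: `StorageBackend`, `DictBackend`, and `BufferManager` are hypothetical names, the backend is a plain dictionary standing in for an SSD or an object store, and eviction is simple LRU.

```python
from collections import OrderedDict
from typing import Protocol

class StorageBackend(Protocol):
    """Anything that can read and write pages: SSD, object store, ..."""
    def read_page(self, page_id: int) -> bytes: ...
    def write_page(self, page_id: int, data: bytes) -> None: ...

class DictBackend:
    """Stand-in backend that keeps pages in a dict and counts reads."""
    def __init__(self) -> None:
        self.pages: dict[int, bytes] = {}
        self.reads = 0

    def read_page(self, page_id: int) -> bytes:
        self.reads += 1
        return self.pages[page_id]

    def write_page(self, page_id: int, data: bytes) -> None:
        self.pages[page_id] = data

class BufferManager:
    """Caches up to `capacity` pages in memory with LRU eviction."""
    def __init__(self, backend: StorageBackend, capacity: int) -> None:
        self.backend = backend
        self.capacity = capacity
        self.cache: OrderedDict[int, bytes] = OrderedDict()
        self.dirty: set[int] = set()

    def get(self, page_id: int) -> bytes:
        if page_id in self.cache:
            self.cache.move_to_end(page_id)  # mark as most recently used
            return self.cache[page_id]
        data = self.backend.read_page(page_id)  # cache miss: go to storage
        self._admit(page_id, data)
        return data

    def put(self, page_id: int, data: bytes) -> None:
        self._admit(page_id, data)
        self.dirty.add(page_id)  # written back lazily, on eviction

    def _admit(self, page_id: int, data: bytes) -> None:
        self.cache[page_id] = data
        self.cache.move_to_end(page_id)
        while len(self.cache) > self.capacity:
            victim, victim_data = self.cache.popitem(last=False)
            if victim in self.dirty:
                self.backend.write_page(victim, victim_data)
                self.dirty.discard(victim)
```

Because the query engine would only ever call `get` and `put`, switching from one kind of storage to another means writing a new backend class with the same two methods, while the algorithms on top stay untouched.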

Learning from their success is learning to be bold. Without treating main memory as a first-class citizen, we would still be clinging to sequential, disk-optimized algorithms. Even with all the lessons from in-memory systems and beyond available, systems that were built for disk are still optimizing for it. For example, even in 2024, PostgreSQL defaults to 4 MB of main memory for query processing, with a gracious 8 MB for hash tables. For reference, the smallest DDR5 memory DIMM that I can find has 8 GB. Even Apple now ships its MacBooks with 16 GB without a $200 upcharge. So the second lesson is to embrace new possibilities, even if some of them may not pay off in the end. Otherwise, no matter how much progress hardware engineers make, you’ll be stuck in the 90s.

Summary and Outlook

So what did we learn from the story of in-memory database systems? For one thing, main memory capacity is now enough to fit a significant share of data, allowing us to rethink data processing algorithms. Also, it is important to try to take advantage of new trends in computer hardware so as not to get stuck with the performance and capabilities of the current generation. However, the painful lesson is that committing to a single technology is a huge gamble that is unlikely to pay off. So it is important to be prepared for future changes in all components of the system, from the storage layer to address the cloud, to join algorithms for time series, to completely different data models.

Is one of the lessons that buffer managers are here to stay? Who knows what the future holds. Right now there is a lot of buzz around CXL as the next big thing to replace the current brand of cloud object storage. And at least for CXL, having different tiers of storage that need to be managed by a component not entirely unlike a buffer manager is part of the core idea. Regardless of whether CXL takes off, and whatever other trends the future brings, building a modular system that allows components like the storage tier to be easily swapped out seems like the best way forward. If you want to be among the first to see the possibilities of such a modular system, be sure to join our waitlist!

Data from: John C. McCallum (2023); U.S. Bureau of Labor Statistics (2024) – with minor processing by Our World in Data. “Memory”. John C. McCallum, “Price and Performance Changes of Computer Technology with Time”; U.S. Bureau of Labor Statistics, “US consumer prices”.