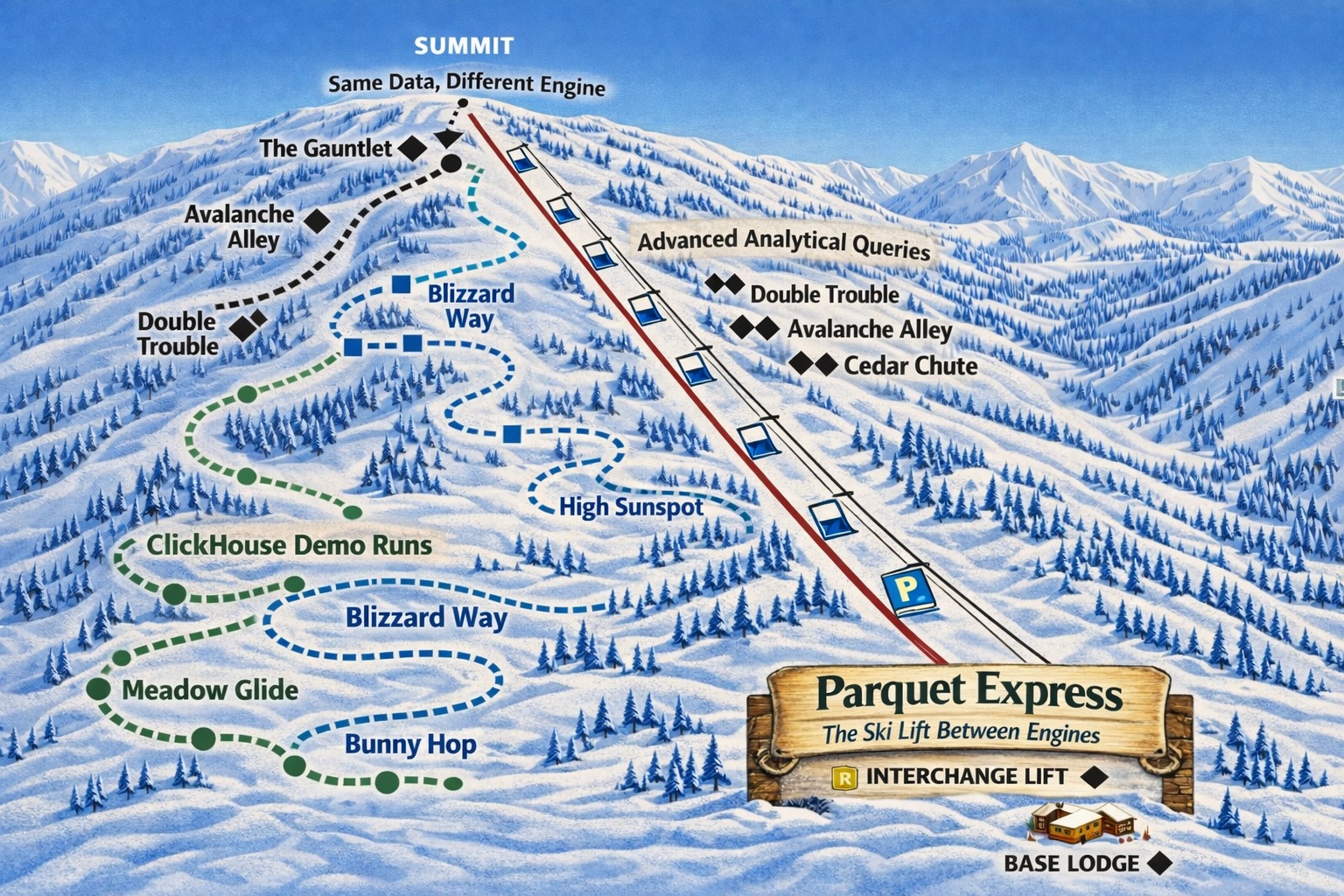

CedarDB Tames the Slopes (of the graph)

It would be natural to ask about the connection between skiing, or taking to the slopes, and database systems. That connection arose out of the way I related to the graph, below, that I created for a recent POC, showing the relationship between the time to run 750 queries against a table, for various row counts, in PostgreSQL, TimescaleDB, and CedarDB.

In skiing terminology, the blue line might be a black diamond run, the green one a beginner run, and the grey line along the x-axis is almost in an entirely different category and more fit for cross-country skiing. What blew my mind was that the slope of the CedarDB line was essentially zero.

The motivation for these database comparisons is a SQL query workload to support an app built by a biotech startup. The app interacts with their hardware device, which collects telemetry based on a user’s activities and, among other things, provides access to a library of content that the user might enjoy. The team at this startup provided us with some background on their database challenges, and that included lists such as top 20 slowest queries and top 20 heaviest queries. After analyzing these, we identified a query that appeared high on both lists, one that informs their recommendation pipeline, so we started there:

SELECT count(*) AS count_1

FROM (

SELECT track_plays.time AS track_plays_time

, track_plays.end_time AS track_plays_end_time

, track_plays.user_id AS track_plays_user_id

, track_plays.session_id AS track_plays_session_id

, track_plays.track_id AS track_plays_track_id

, track_plays.duration_sec AS track_plays_duration_sec

, track_plays.source AS track_plays_source

FROM track_plays

WHERE

track_plays.time >= $1

AND track_plays.end_time <= $2

AND track_plays.user_id = $3

AND track_plays.duration_sec >= $4

) AS anon_1;

Looking at it, it’s not complex: just a table scan with a filter on a couple of TIMESTAMP and INTEGER columns.

How Timescale Makes the Slope Beginner Friendly

Credit where credit is due, let’s first take a look at how TimescaleDB managed to flatten the slope considerably for our POC customer.

TimescaleDB is a PostgreSQL extension designed to optimize the processing of time-series data, which is exactly what the track_plays table tracks.

To really see the effect above with TimescaleDB, you must explicitly set it up using Hypertables,

which partition the data by the time column using hourly partitions (chunk_interval). This, however, requires advance knowledge of your data and query patterns.

...

WITH

(

timescaledb.hypertable,

timescaledb.partition_column='time',

timescaledb.chunk_interval='1 hour'

);

TimescaleDB can then use the partitions at query time, selecting only those relevant to the query, which drastically speeds up many time-series workloads. While it’s reasonable to assume that you often know this kind of information beforehand, unfortunately, TimescaleDB’s Hypertables are not without tradeoffs:

- Foreign key constraints are not supported between hypertables.

- UPDATE statements that would move a row between chunks are not allowed.

- All columns used for partitioning dimensions must be included in a unique index.

- The time column used for partitioning cannot contain NULL values.

While these tradeoffs are acceptable for many workloads, the question is: Do you need to make them?

So, Where’s the Secret Sauce with CedarDB?

Having seen how a use-case specific database system can make workloads beginner friendly, let’s take a look at how CedarDB manages to play a different sport entirely. How do I account for the vastly different performance results I observed for this workload? After all, TimescaleDB is specifically built to tackle just this type of time series data challenge.

While the performance increase is a mix of a lot of innovations,

I want to highlight the one aspect I think best maps to what Hypertables do in TimescaleDB, the concept of “early pruning”.

When scanning a table, CedarDB manages to check many predicates on metadata only, avoiding to scan blocks that don’t qualify entirely.

In this instance, since a timestamp is just a 64 bit integer, timestamp comparisons are just integer comparisons internally, and

CedarDB is able to just skip most data blocks when filtering for a time range, like the heavy-hitter query does.

How is it able to achieve this? Here are some key aspects contributing to that:

- CedarDB stores large chunks of data together into compressed blocks.

- CedarDB stores statistics for each block (e.g., max value, min value, count).

- If there is no value in a given data block that qualifies, per the query predicate, CedarDB avoids scanning that block.

- Time-series data is often loosely inserted in timestamp order. Even if the order is not perfect, overall timestamps close together are stored in the same block.

- Filters are especially useful for pruning in a time series data workload, where it’s common to filter by a timestamp column (e.g., all values of the last month), as illustrated here.

Sounds familiar? That is exactly the effect Hypertables have in TimescaleDB, just on all columns instead of one and without needing to specify anything beforehand.

And the beauty of it is that you get all this without sacrificing flexibility in other areas.

Some Takeaways

What else did I learn from this exercise, besides that I need to get ready for skiing season? Here’s what I’ve jotted down:

The observations represented by the graphs up above inspired me to continue, with more data, so I moved the test jig off my M4 MacBook and onto an r7i.8xlarge instance in EC2. There, I gradually increased the row count in this table (via a data generator), from 25 M rows, where I recorded a cumulative runtime (750 queries) of 79 ms, to 1.13 billion rows, where the runtime was 96 ms. That 1.13B rows is 180x the size of the data point represented by the right-most point in the graph, yet the query runtime in CedarDB remained essentially constant.

… So, while CedarDB is amazing on the 8 vCPU & 32 GB RAM of my MacBook Docker setup, when it has access to the 32 vCPU & 128 GB of RAM of the larger EC2 instance, it takes full advantage of all that CPU and memory.

The efficiency here takes on two forms: (1) you pay less for IaaS or data center and (2) simplification through simplified DDL, fewer indexes, and the ability to consolidate multiple workloads onto a single, general purpose database.

Finally, this radical performance boost may embolden you to consider some new use cases you may have been hesitant to explore using your existing database engine.

Note: If the folks we’re collaborating with on this give us the “OK”, we’ll share the code used here and update this with links.

Call to action: If you’re curious and want to give CedarDB a try in AWS, the CloudFormation template that I used to automate setup of my CedarDB in EC2 can help you get started pretty easily.

Thank you!

If you’d like to discuss your data challenges with us, we’d love to hear from you.

Appendix: The Nuts and Bolts

To investigate how this workload would perform in CedarDB, we needed a few things: Here’s the DDL for In PostgreSQL, our DDL was similar, but didn’t contain the Finally, in CedarDB, the DDL from Postgres reduces to just this (note the absence of indexes): This is a sample row of generated data: The Bash script that drove the test runs: Here’s the progression of data generation used to create the graphs: Data loading, TimescaleDB: PostgreSQL: And CedarDB: What I noticed: CedarDB’s load time is less than 10% of the time taken by either TimescaleDB or Postgres,

which isn’t surprising given CedarDB doesn’t require any indexes for this workload, so it avoids the costs

associated with them. For each of the three DB’s, and for each of the data sizes we measured, four runs were measured; the average

of these values appears in the data table below. Here is an example test run: What the Test Runs Looked Like: And the resulting data, from which the graphs were created:Show Benchmark Setup

track_plays (DDL below).track_plays, in TimescaleDB. A couple of things to note:time column, using hourly partitions (our generated data covered a single day), allowing queries

to target only the relevant chunks, drastically improving performance compared to a standard, unpartitioned table, especially for large time-series datasets.CREATE TABLE public.track_plays

(

"time" timestamp with time zone NOT NULL,

user_id text NOT NULL,

session_id text NOT NULL,

track_id text NOT NULL,

duration_sec integer NOT NULL,

source TEXT DEFAULT 'default_source'::TEXT NOT NULL,

end_time timestamp with time zone

)

WITH

(

timescaledb.hypertable,

timescaledb.partition_column='time',

timescaledb.chunk_interval='1 hour'

);

CREATE INDEX ON public.track_plays USING btree (end_time);

CREATE INDEX ON public.track_plays USING btree (session_id, "time" DESC);

CREATE INDEX ON public.track_plays USING btree ("time", end_time, duration_sec);

CREATE INDEX ON public.track_plays USING btree ("time", user_id, end_time, duration_sec);

WITH ... portion defining the hypertable.CREATE TABLE public.track_plays

(

"time" timestamp with time zone NOT NULL,

user_id text NOT NULL,

session_id text NOT NULL,

track_id text NOT NULL,

duration_sec integer NOT NULL,

source TEXT DEFAULT 'default_source'::TEXT NOT NULL,

end_time timestamp with time zone

);

postgres=# select * from track_plays order by random() limit 1;

time | user_id | session_id | track_id | duration_sec | source | end_time

------------------------+--------------------+------------------+-------------+--------------+--------+------------------------

2025-11-01 11:40:35+00 | fransisco.jolliffe | 6d473b55db1c71cb | track_85767 | 395 | music | 2025-11-01 11:47:10+00

(1 row)

#!/bin/bash

# This will print -runs X -threads lines of output; e.g. for 25 runs and 30 threads, there will be 750 lines of output

if [ -z ${DB_URL+x} ]

then

echo " DB_URL must be set"

echo ""

echo " Example: export DB_URL=\"postgresql://postgres:postgres@localhost:5432/postgres?sslmode=require\""

echo ""

exit 1

fi

# Build Go app if the EXE isn't present here

[ -x ./go-pgx-tester ] || go mod tidy && go build .

# Obtain initial and final time bounds from generated data

t0=$( psql "$DB_URL" -c "\\timing off" -tAc "select min("time") from track_plays;" | tail -1 | perl -ne 's/ /T/; s/\+00$/Z/; print;' )

t1=$( psql "$DB_URL" -c "\\timing off" -tAc "select max("time") from track_plays;" | tail -1 | perl -ne 's/ /T/; s/\+00$/Z/; print;' )

# List of users

users=$( psql "$DB_URL" -c "\\timing off" -tAc "with a as (select distinct(user_id) from track_plays) select string_agg(user_id, ',') from a;" | tail -1 )

./go-pgx-tester \

-db "$DB_URL" \

-start $t0 \

-end $t1 \

-max-dur 450 \

-min-dur 30 \

-runs 25 \

-sleep 100us \

-threads 30 \

-users $users

# Print which DB ran the test

psql "$DB_URL" -c "\\timing off" -tAc "select version();" | tail -1

# Print number of rows in the table

n_rows=$( psql "$DB_URL" -c "\\timing off" -tAc "select count(*) from track_plays;" | tail -1 )

echo "Row count: $n_rows"

$ ./data_gen_track_plays_sleep_states_duration.py --users 100 --sessions-per-user 15 --sleep-cadence-sec 5 --minutes-per-session 720 --start '2025-11-01T00:00:00Z' --sleep-csv sleep_states.csv --tracks-csv track_plays.csv --tracks-only

$ ./data_gen_track_plays_sleep_states_duration.py --users 100 --sessions-per-user 30 --sleep-cadence-sec 5 --minutes-per-session 720 --start '2025-11-01T00:00:00Z' --sleep-csv sleep_states.csv --tracks-csv track_plays.csv --tracks-only

$ ./data_gen_track_plays_sleep_states_duration.py --users 100 --sessions-per-user 60 --sleep-cadence-sec 5 --minutes-per-session 720 --start '2025-11-01T00:00:00Z' --sleep-csv sleep_states.csv --tracks-csv track_plays.csv --tracks-only

$ ./data_gen_track_plays_sleep_states_duration.py --users 140 --sessions-per-user 75 --sleep-cadence-sec 5 --minutes-per-session 720 --start '2025-11-01T00:00:00Z' --sleep-csv sleep_states.csv --tracks-csv track_plays.csv --tracks-only

$ ./data_gen_track_plays_sleep_states_duration.py --users 281 --sessions-per-user 75 --sleep-cadence-sec 5 --minutes-per-session 720 --start '2025-11-01T00:00:00Z' --sleep-csv sleep_states.csv --tracks-csv track_plays.csv --tracks-only

$ ./data_gen_track_plays_sleep_states_duration.py --users 570 --sessions-per-user 75 --sleep-cadence-sec 5 --minutes-per-session 720 --start '2025-11-01T00:00:00Z' --sleep-csv sleep_states.csv --tracks-csv track_plays.csv --tracks-only

$ psql "postgresql://postgres:postgres@localhost:15432/postgres?sslmode=disable" -c "COPY track_plays FROM stdin DELIMITER ',' NULL '\\N' ESCAPE '\' HEADER CSV" < track_plays.csv

Timing is on.

COPY 6220985

Time: 54162.184 ms (00:54.162)

$ psql "postgresql://postgres:postgres@localhost:15432/postgres?sslmode=disable" -c "COPY track_plays FROM stdin DELIMITER ',' NULL '\\N' ESCAPE '\' HEADER CSV" < track_plays.csv

Timing is on.

COPY 6220985

Time: 60835.740 ms (01:00.836)

$ psql "postgresql://postgres:postgres@localhost:5432/postgres?sslmode=require" -c "COPY track_plays FROM stdin DELIMITER ',' NULL '\\N' ESCAPE '\' HEADER CSV" < track_plays.csv

Timing is on.

COPY 6220985

Time: 10038.192 ms (00:10.038)

$ ./run_test.sh

Starting workload: threads=30 runs/thread=25 time=[2025-11-01T00:00:00Z .. 2025-11-02T11:59:55Z] dur=[30..450] users=460 timeout=15s

2025/11/14 10:58:07 [w15] ok run=0 uid=milana.katsma time=[2025-11-01T06:51:43Z..2025-11-01T07:17:03Z] dur=[301] count=131 latency=4.547542ms

2025/11/14 10:58:07 [w22] ok run=0 uid=robert.gottreich time=[2025-11-02T07:25:17Z..2025-11-02T08:10:53Z] dur=[38] count=609 latency=4.853083ms

...

2025/11/14 10:58:14 [w06] ok run=24 uid=con.gustafson time=[2025-11-01T08:24:13Z..2025-11-01T08:50:51Z] dur=[274] count=358 latency=614.541µs

2025/11/14 10:58:14 [w15] ok run=24 uid=matute.gluck time=[2025-11-02T02:22:44Z..2025-11-02T02:44:23Z] dur=[59] count=507 latency=580.792µs

Done in 130.279042ms

PostgreSQL 16.3 compatible CedarDB v2025-11-06

Row count: 6220985

N_Rows Postgres (ms) TimescaleDB (ms) CedarDB (ms) 218,380 248 128 127 436,700 523 183 130 873,437 853 301 133 1,528,447 1267 488 136 3,067,366 6932 1312 127 6,220,985 15180 2847 132